Guided Real Image Dehazing using YCbCr Color Space

AAAI 2025

- Wenxuan Fang1

- Junkai Fan1

- Yu Zheng1

- Jiangwei Weng1

- Ying Tai2

- Jun Li*1


1School of Computer Science and Engineering, Nanjing University of Science and Technology

2Intelligence Science and Technology, Nanjing University

Abstract

Image dehazing, particularly with learning-based methods, has gained significant attention due to its importance in real-world applications. However, relying solely on the RGB color space often falls short, frequently leaving residual haze. This arises from two main issues: the difficulty of obtaining clear textural features from hazy RGB images, and the complexity of acquiring real haze/clean image pairs outside controlled environments such as smoke-filled scenes. To address these issues, we first propose a novel Structure Guided Dehazing Network (SGDN) that leverages the superior structural properties of YCbCr features over RGB. SGDN comprises two key modules: the Bi-Color Guidance Bridge (BGB) and the Color Enhancement Module (CEM). BGB integrates a phase integration module and an interactive attention module, using the rich texture features of the YCbCr space to guide the RGB space and thereby recover clearer features in both the frequency and spatial domains. To maintain tonal consistency, CEM further enhances the color perception of RGB features by aggregating YCbCr channel information. Furthermore, for effective supervised learning, we introduce the Real-World Well-Aligned Haze (RW^2AH) dataset, which includes a diverse range of scenes from various geographical regions and climate conditions. Experimental results demonstrate that our method surpasses existing state-of-the-art methods across multiple real-world smoke/haze datasets.

Motivation

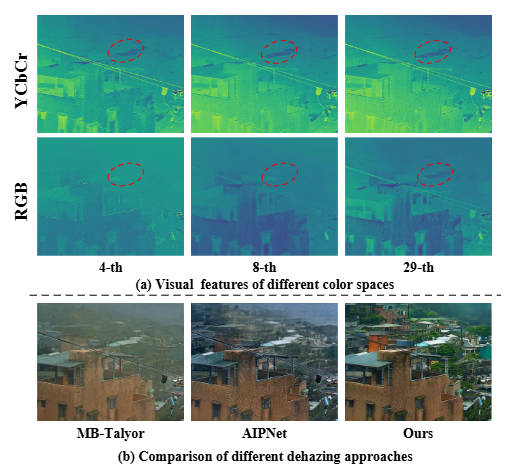

Visual comparison of different color spaces:

(a) RGB features suffer from degradation, resulting in unclear textures, whereas YCbCr features are less impacted by fog and reveal more distinct textures.

(b) RGB models (e.g., MB-Taylor) leave residual haze, while YCbCr models (e.g., AIPNet) struggle with color distortion.

Our approach effectively removes heavy fog while preserving color accuracy.
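For readers unfamiliar with the YCbCr representation compared above, the following is a minimal sketch of a per-pixel RGB to YCbCr conversion. It assumes the full-range BT.601 (JFIF) coefficients; the page does not state which YCbCr variant SGDN uses, and the function name `rgb_to_ycbcr` is purely illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to YCbCr floats.

    Assumption: full-range BT.601 (JFIF) coefficients. The variant
    used by the paper is not stated on this page.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b                 # luma (brightness)
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b    # blue-difference chroma
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b    # red-difference chroma
    return y, cb, cr


if __name__ == "__main__":
    # Two gray pixels of different brightness, then one saturated pixel.
    for pixel in [(200, 200, 200), (90, 90, 90), (200, 60, 40)]:
        y, cb, cr = rgb_to_ycbcr(*pixel)
        print(pixel, "->", round(y, 1), round(cb, 1), round(cr, 1))
```

For any gray pixel (R = G = B), both chroma channels sit at the neutral value 128 and all of the brightness variation lands in Y. This separation of luma from chroma is what distinguishes the representation from RGB, where every channel mixes brightness and color.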

Method

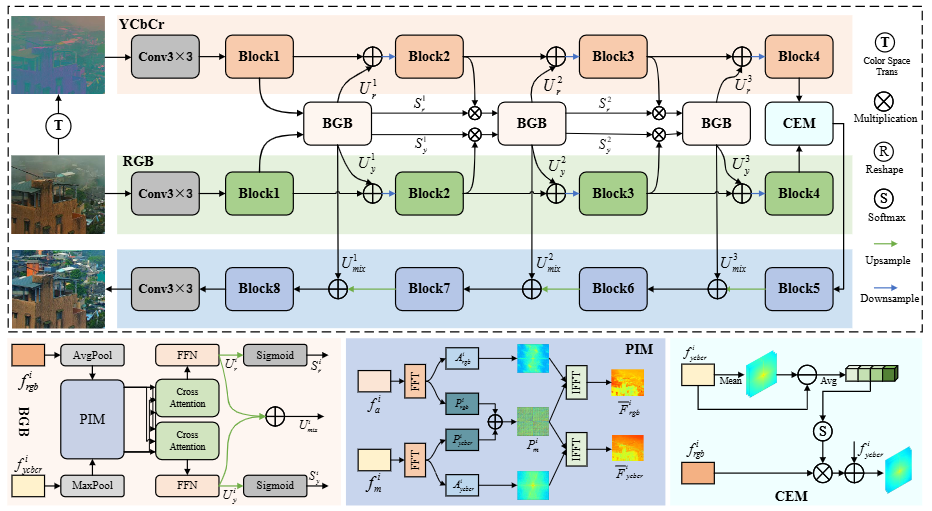

The overall pipeline of our SGDN.

It includes the proposed Bi-Color Guidance Bridge (BGB) and Color Enhancement Module (CEM).

BGB uses the YCbCr color space to guide RGB features toward clearer textures in both the frequency and spatial domains, while CEM significantly enhances the visual contrast of the images.
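To make the frequency-domain terminology concrete, here is a generic sketch of what the amplitude and phase of a signal are, and how the phase of one signal can be recombined with the amplitude of another. This is not the BGB phase integration module: the page does not describe how that module combines the two color spaces, so the recombination rule and the names `dft`, `idft`, and `swap_phase` are assumptions made purely for illustration, on 1D signals and with a naive DFT.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def swap_phase(amp_source, phase_source):
    """Illustrative only: keep the amplitude spectrum of `amp_source`
    and take the phase spectrum from `phase_source`."""
    amp_spec = dft(amp_source)
    phase_spec = dft(phase_source)
    mixed = [
        cmath.rect(abs(a), cmath.phase(p))  # magnitude from a, angle from p
        for a, p in zip(amp_spec, phase_spec)
    ]
    # For real inputs the mixed spectrum stays conjugate-symmetric,
    # so the imaginary part of the result is numerical noise.
    return [v.real for v in idft(mixed)]


if __name__ == "__main__":
    blurry = [0.4, 0.45, 0.5, 0.55, 0.6, 0.55, 0.5, 0.45]  # low-contrast signal
    sharp = [0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0]       # signal with clear edges
    print([round(v, 3) for v in swap_phase(blurry, sharp)])
```

Swapping a signal's phase with its own phase returns the original signal, which is a quick sanity check that the decomposition into amplitude and phase loses no information.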

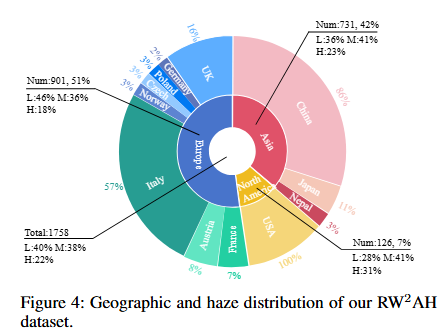

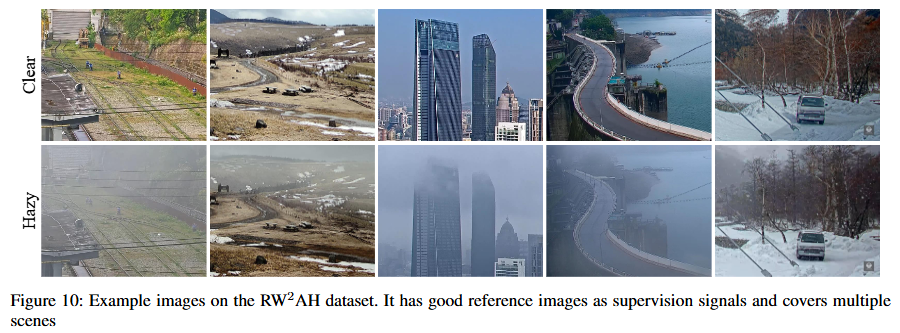

Real-World Well-Aligned Haze Dataset

To enable effective supervised learning, we collect a real-world haze dataset featuring multiple scenes and varying haze concentrations, named the Real-World Well-Aligned Haze (RW^2AH) dataset, with a total of 1758 image pairs. The RW^2AH dataset primarily records haze/clean images captured by stationary webcams from YouTube, with scenes including landscapes, vegetation, buildings and mountains.

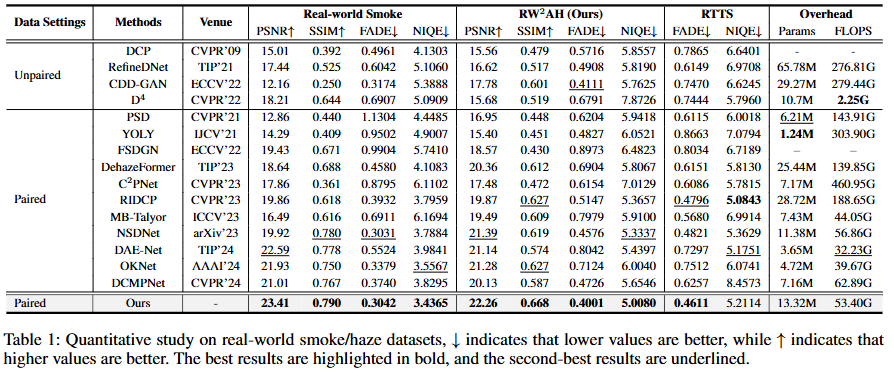

Results

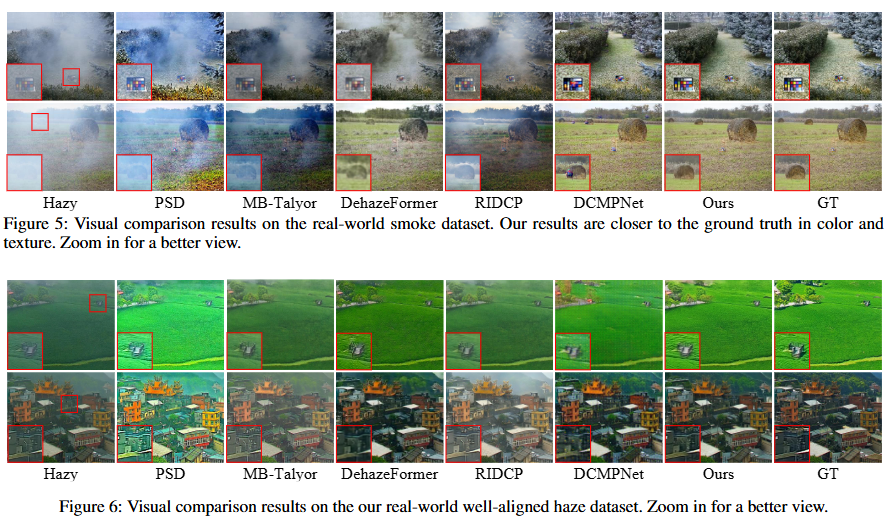

Comparing dehazing results on real-world smoke and haze datasets.

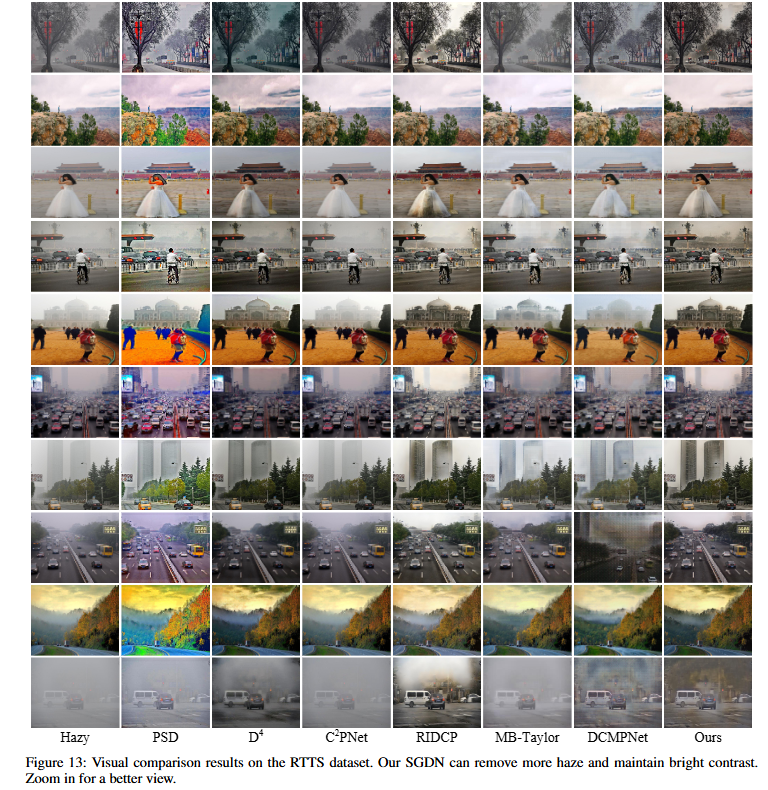

More quantitative results. We compare our framework with previous state-of-the-art methods on RTTS.

Citation

If you find our work useful in your research, please consider citing:

Acknowledgements

The website template was borrowed from Michaël Gharbi.